Inside the AI security conclave

Last week, Tim Van hamme, Blue41’s co-founder and CTO, attended a Dagstuhl Seminar on the Security and Privacy of Large Language Models. For those unfamiliar, Dagstuhl seminars are invite-only retreats that bring leading computer science researchers together in a secluded German castle to tackle the field’s most pressing challenges. Participants included representatives from Oxford, ETHZ, CMU, Cisco/Robust Intelligence, Bosch, Apple, IBM, and Microsoft, among others.

The consensus among researchers from academia and industry was clear: LLMs will always be vulnerable to determined adversaries. As we explored last week, prompt injections aren’t a problem waiting for a solution—they’re a fundamental characteristic of how LLMs process text.

But here’s what emerged as the critical insight: we’ve been thinking about LLM security too narrowly.

Figure: Dagstuhl participants in front of the castle

Moving beyond the AI firewall paradigm

For years, the security community has fixated almost exclusively on what goes into the LLM (inputs) and what comes out (outputs). We build guardrails to filter prompts. We scan responses for sensitive data. We test for jailbreaks in isolation.

This input-output tunnel vision misses a fundamental truth: LLMs don’t operate in a vacuum. They’re embedded in applications—reading emails, accessing databases, calling APIs, transferring data, making decisions that affect real systems and real users.

To meaningfully discuss AI security, we must study these systems as a whole. Only then can we move beyond theoretical attacks and toward pragmatic defenses that work in production environments.

Two working groups, one message: context matters

The seminar broke into working groups to advance the field. Two stood out for their alignment with Blue41’s approach:

The Benchmarks Working Group wrestled with a critical question: How do we measure whether a new attack or defense actually works? The community needs realistic environments where AI applications can be deployed and tested—not just isolated LLM playgrounds. This requires aligning incentives to ensure up-to-date systems-level benchmarks that reflect real-world complexity.

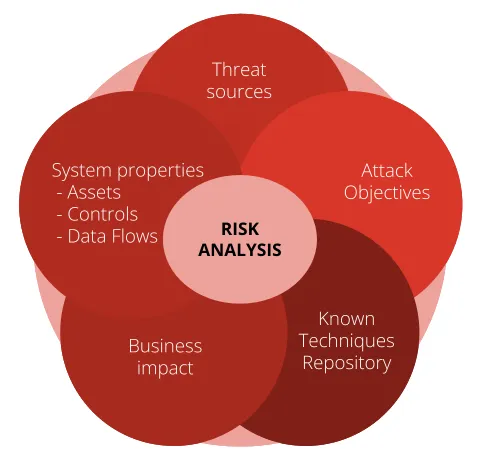

The Defenses Working Group tackled an even more fundamental challenge: before evaluating defenses, we need a framework for characterizing the threat surface. What are we actually defending against? Which defenses are most effective for which threats? What’s the cost-benefit tradeoff?

This is where our work on threat modeling for AI applications proved valuable to the discussion. We shared how we help clients understand their unique attack surface and prioritize mitigations accordingly. The approach was very well received and became the primary driver of the discussion.

Figure: High-level overview of the components required for characterizing the threat surface of an LLM-enabled application

What AI builders must do (and how Blue41 helps)

The path forward requires four foundational capabilities:

1. Understand runtime behavior

You can’t secure what you don’t understand. Before implementing any defense, you need visibility into how your AI application actually behaves: which tools it calls, what data it accesses, how workflows execute under different conditions.

Blue41’s workflow graph provides exactly this visibility, automatically mapping your AI agent’s behavior patterns and surfacing the information essential for effective threat modeling.

Figure: Example workflow graph: Insurance Assistant
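To make the idea of a workflow graph concrete, here is a minimal Python sketch that counts step-to-step transitions across recorded agent runs. It is an illustration only, not Blue41's implementation: the function `build_workflow_graph`, the `START`/`END` markers, and the tool names in the sample traces are all hypothetical.

```python
from collections import Counter


def build_workflow_graph(traces):
    """Count how often each step follows another across agent runs.

    `traces` is a list of runs; each run is an ordered list of tool names.
    Returns a Counter keyed by (source_step, destination_step).
    """
    edges = Counter()
    for trace in traces:
        # Hypothetical START/END markers make entry and exit points visible.
        steps = ["START", *trace, "END"]
        for src, dst in zip(steps, steps[1:]):
            edges[(src, dst)] += 1
    return edges


# Hypothetical traces from an insurance assistant (invented tool names).
traces = [
    ["lookup_policy", "fetch_claim", "draft_reply"],
    ["lookup_policy", "draft_reply"],
]

graph = build_workflow_graph(traces)
for (src, dst), count in sorted(graph.items()):
    print(f"{src} -> {dst}: {count}")
```

Even a simple edge count like this shows which tools an agent reaches and in what order, which is the raw material a threat model needs.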

2. Enforce security best practices such as least privilege

Security fundamentals still apply—perhaps more than ever. AI agents shouldn’t have blanket access to every tool and data source. They should operate within well-defined boundaries.

Blue41’s behavioral profiling learns the normal execution patterns for each AI agent in your environment. When an agent attempts actions outside its typical behavior—accessing unusual data sources, calling unfamiliar APIs, or deviating from established workflows—the system flags it immediately. This effectively enforces least privilege without requiring you to manually define every permission boundary.
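As a rough illustration of the principle, the sketch below learns which (tool, resource) pairs an agent normally uses and flags anything outside that set. It is a deliberately simplified stand-in, not Blue41's profiling method: the `BehaviorProfile` class, its methods, and the tool and resource names are assumptions made for this example.

```python
from dataclasses import dataclass, field


@dataclass
class BehaviorProfile:
    """Hypothetical profile: the set of (tool, resource) pairs seen so far."""

    known_actions: set[tuple[str, str]] = field(default_factory=set)

    def learn(self, tool: str, resource: str) -> None:
        # Record an action observed during normal operation.
        self.known_actions.add((tool, resource))

    def is_anomalous(self, tool: str, resource: str) -> bool:
        # Anything never observed during learning counts as a deviation.
        return (tool, resource) not in self.known_actions


profile = BehaviorProfile()
profile.learn("read_db", "claims")
profile.learn("send_email", "customer")

seen_before = profile.is_anomalous("read_db", "claims")
never_seen = profile.is_anomalous("read_db", "payroll")
print(seen_before, never_seen)
```

A production system would need more than set membership (frequencies, ordering, context), but the allow-what-was-observed logic is what makes this resemble least privilege without hand-written permission lists.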

3. Enable human oversight and auditability

Automated detection is essential, but human oversight remains critical. When anomalies are detected, security teams need the ability to investigate: What exactly did the agent do? Why did it deviate from normal behavior? Was this a legitimate edge case or a security incident?

Blue41 provides complete audit trails of your AI agents’ behavior—every tool call, every data access, every decision point. When something suspicious occurs, you can trace the full execution path to understand what happened and why. This transparency is essential not just for incident response, but for compliance requirements and building trust with stakeholders who need assurance that AI systems are operating as intended.
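One way to picture such an audit trail is as an append-only log of structured events that can be filtered by session. The sketch below assumes a JSON-lines format with hypothetical field names (`ts`, `session`, `kind`, `detail`); it is not Blue41's schema.

```python
import json
from datetime import datetime, timezone


def audit_record(session_id, kind, detail):
    """Serialize one agent event as a JSON line (hypothetical schema)."""
    return json.dumps({
        "ts": datetime.now(timezone.utc).isoformat(),
        "session": session_id,
        "kind": kind,  # e.g. "tool_call", "data_access", "decision"
        "detail": detail,
    })


def execution_path(log_lines, session_id):
    """Return the events of one session, in the order they were logged."""
    events = (json.loads(line) for line in log_lines)
    return [event for event in events if event["session"] == session_id]


# Hypothetical log with two interleaved sessions.
log = [
    audit_record("s1", "tool_call", {"tool": "lookup_policy"}),
    audit_record("s2", "tool_call", {"tool": "fetch_claim"}),
    audit_record("s1", "data_access", {"table": "claims"}),
]

path = execution_path(log, "s1")
for event in path:
    print(event["kind"], event["detail"])
```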

4. Automate response actions

Manual response doesn’t scale. Once your behavioral profiles reach a sufficient level of trust, Blue41 enables you to automate containment and remediation actions. Terminate suspicious sessions automatically, block high-risk actions before they execute, update guardrails based on newly observed attack patterns, or trigger application-specific responses like taking down malicious endpoints.

This shifts your security posture from reactive to proactive—threats are contained before they cause damage, without waiting for human intervention.
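The decision logic behind automated response can be sketched as a small policy function. Everything here is an assumption for illustration, not Blue41's API: the `Response` enum, the `choose_response` function, its parameters, and the threshold of three anomalies per session. The sketch only automates actions once the profile is marked as trusted, and falls back to a human alert otherwise.

```python
from enum import Enum


class Response(Enum):
    ALLOW = "allow"
    ALERT_HUMAN = "alert_human"
    BLOCK_ACTION = "block_action"
    TERMINATE_SESSION = "terminate_session"


def choose_response(*, anomalous, high_risk, session_anomalies,
                    profile_trusted, max_anomalies=3):
    """Pick a response for one agent action (hypothetical policy)."""
    if not anomalous:
        return Response.ALLOW
    if not profile_trusted:
        # Profile is still immature: keep a human in the loop.
        return Response.ALERT_HUMAN
    if session_anomalies >= max_anomalies:
        # Repeated deviations in one session: end the session.
        return Response.TERMINATE_SESSION
    if high_risk:
        # Single high-risk deviation: stop it before it executes.
        return Response.BLOCK_ACTION
    return Response.ALERT_HUMAN


decision = choose_response(
    anomalous=True, high_risk=True, session_anomalies=1, profile_trusted=True
)
print(decision.value)
```

Gating automation on profile trust mirrors the point above: automated containment is only as reliable as the behavioral baseline it acts on.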

Time to move beyond guardrails

Discussing these challenges with leading AI security researchers only reinforced what we’ve built Blue41 around: real AI security happens at the systems level. Guardrails will continue to fail. Prompt injections will persist. But by understanding how your AI applications behave and detecting when that behavior changes, you can defend against threats that don’t yet have names.

Ready to start defending your AI agents today? Book a short introduction with us.

We’d love to learn about your AI security challenges and show you how behavioral monitoring can protect your production environment.