Jailbreaking: The Researcher's Playground

Commercial providers of chat models employ robust safety measures to prevent misuse. These safeguards ensure that models like ChatGPT, Claude, and Gemini are not used to generate malware, create phishing schemes, or share dangerous knowledge (e.g. instructions for making explosives). However, security researchers have taken pride in bypassing these restrictions — an act now widely known as jailbreaking. To the researchers’ delight, jailbreaking has attracted significant media attention. But here’s the hard truth:

Companies do not care about jailbreaking, and we cannot (entirely) disagree!

This seemingly indifferent stance raises essential questions: Why does jailbreaking persist despite these safeguards, and why does it matter? In this article, we tackle these issues from two key perspectives. First, we examine how adversaries exploit large language models (LLMs) and the risks this poses. Then, we explore the implications of inadequate safety measures, both now and in the future, shedding light on the real stakes of AI security.

|

|---|

| Example of a prompt injection attack that jailbreaks Gemini. |

Attackers Follow the Path of Least Resistance

When discussing AI jailbreaking, most people immediately think of prompt injection1 — crafting clever inputs to manipulate an AI into bypassing its safety mechanisms. However, this focus may be misplaced. Jailbreaking is the adversarial goal, while prompt injection is merely one possible attack vector to achieve it (you can read our earlier blog for a detailed discussion on that).

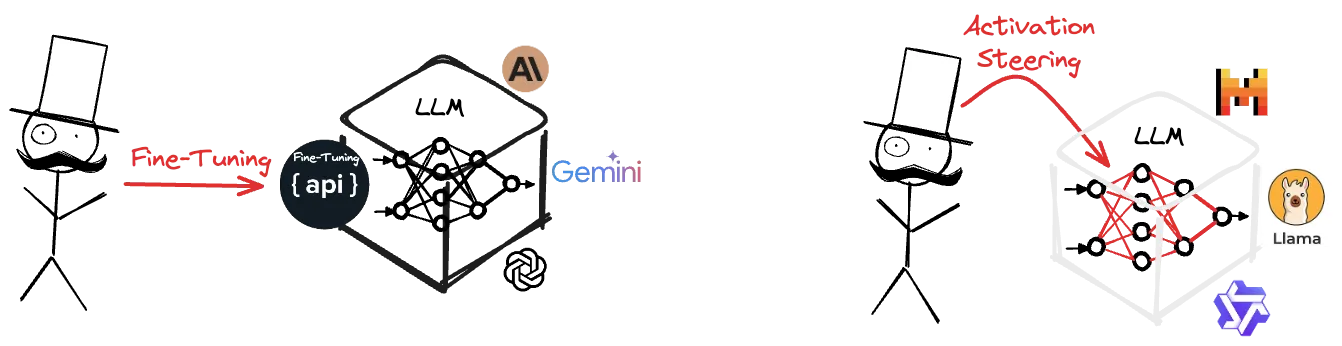

In practice, malicious actors tend to favor the path of least resistance. Research reveals that fine-tuning2 popular models like ChatGPT through accessible APIs offers a more straightforward path to eliminate the model’s refusal capabilities. That said, refusal mechanisms embedded within models are not the only line of defense; providers also implement external safety measures, such as content filters, to thwart jailbreak attempts.

However, such filters are no concern for adversaries running open-source models. Today, attackers can readily download highly capable, pre-trained models —complete with built-in safety measures removed— from platforms like Hugging Face. With open-source models, adversaries sidestep content moderation present in proprieraty models entirely while gaining a critical advantage: full access to the model’s internal architecture and parameters. This “white-box” access enables far more sophisticated modifications than surface-level techniques like prompt injection or fine-tuning.

|

|---|

| Left: Black-box attack - Jailbreak through the fine-tuning API. Right: White-box attack - Manipulating the internals of the model. |

By directly manipulating the model’s internal neural pathways, adversaries can more effectively dismantle the model’s capability to refuse requests. One particularly powerful method enabled by white-box access is activation steering. This technique involves tweaking the internal dynamics of the model’s neural network to align it with the desired behavior3. In essence, activation steering fundamentally rewires the model’s compliance mechanisms.

Such approaches highlight a key advantage for attackers: they offer a more efficient, lasting way to defeat refusal mechanisms compared to the trial-and-error demands of prompt engineering. While prompt injection requires constant adaptation to evolving defenses, model modification is a one-time effort with enduring results. It’s like ensuring the backdoor remains unlocked instead of having to pick the front door lock every time you want to get in. Given the resources and strategic benefits involved, adversaries are more likely to rely on their own modified models than engage in an endless game of cat-and-mouse with increasingly robust safety measures.

AI Safety: Today’s Challange and Tomorrow’s Threat

The second nuance to our opening statement concerns timelines. Currently, successfully jailbreaking an AI system provides little information that is not readily available through other means — whether through search engines, textbooks, or existing online communities. Therefore, we conclude that the real-world impact of bypassing AI safety measures is, at present, relatively modest.

However, this situation is temporary. As language models become more capable, their potential for both benefit and harm increases4. For example, granting them greater autonomy to automate critical processes — like advanced software engineering, payments, and communication—will significantly amplify risks. Would you trust an AI with full control over your email?

As these models take on more responsibilities, the ability to effectively moderate AI outputs and maintain robust safety measures will become increasingly crucial. This is why current research into refusal mechanisms and output steering is vital — not because of today’s threats, but tomorrow’s possibilities.

Why This Matters: A Stakeholder Perspective

Researchers might view the current AI safety and security landscape as an academic playground, while organizations building and deploying AI-powered applications perceive it as a complex, high-stakes risk environment. At first glance, research findings may seem disconnected from immediate real-world threats and appear unhelpful in addressing urgent challenges. However, a different perspective highlights their critical importance in shaping our understanding of future vulnerabilities. For instance, the path of least resistance for adversaries suggests that relying solely on traditional defenses —such as focusing exclusively on “AI firewalls” to prevent prompt injection— might be a flawed strategy. Instead, a more comprehensive approach to model security and deployment is essential.

These perspectives underscore a critical point: what might appear to be an academic playground is, in fact, a vital testing ground for future AI safety and security strategies.

The Road Ahead: Building Safe and Secure AI Systems

Companies do not care about jailbreaking, and we cannot (entirely) disagree!

Our provocative opening statement contains a kernel of truth when viewed through today’s lens, but it should not lead to complacency. The challenge of AI safety requires proactive rather than reactive measures.

As AI systems become increasingly autonomous, the gap between surface-level security measures and deep, systemic vulnerabilities continues to widen. Organizations are increasingly finding themselves in uncharted territory.

Our ongoing research delves into these critical vulnerabilities, exploring sophisticated techniques that go far beyond conventional threat models. We specialize in comprehensive risk elicitation, systematic vulnerability assessment, and developing innovative approaches to continuous model behavior monitoring.

The research playground of today is building the foundations for the robust AI systems of tomorrow. While current jailbreaking attempts might lack immediate real-world impact, they’re creating the knowledge and expertise we’ll need as AI systems become more powerful and integral to our society.

The key is not to fear these potential vulnerabilities, but to understand them deeply—a mission that drives our continuous research efforts.

Would you like to explore how these insights might apply to your organization’s unique AI challenges? Contact us.

References

Footnotes

-

Shen, Xinyue, Zeyuan Chen, Michael Backes, Yun Shen, and Yang Zhang. “Do anything now”: Characterizing and evaluating in-the-wild jailbreak prompts on large language models.” arXiv preprint arXiv:2308.03825 (2023). To appear in CCS 2024. ↩

-

Zhan, Q., Fang, R., Bindu, R., Gupta, A., Hashimoto, T. and Kang, D., 2023. Removing rlhf protections in gpt-4 via fine-tuning. arXiv preprint arXiv:2311.05553. ↩

-

Arditi, A., Obeso, O., Syed, A., Paleka, D., Panickssery, N., Gurnee, W. and Nanda, N., 2024. Refusal in language models is mediated by a single direction. arXiv preprint arXiv:2406.11717. ↩

-

Anthropic, 2024. Anthropic’s responsible scaling policy. https://www.anthropic.com/news/anthropics-responsible-scaling-policy. ↩